The goal of AI orchestration is to provide a single, unified platform for teams to oversee and manage AI-related workflows across the entire organization. This post describes the ideal AI orchestration solution and the technologies that make it work, helping companies use artificial intelligence more efficiently.

AI challenges to overcome

The challenges an organization must overcome to use AI more cost-effectively and see faster returns can be broken down into three categories:

- Overseeing AI-led workflows to ensure models are behaving as expected and providing accurate results, when these workflows are spread across the enterprise in different geographic locations and vendor-specific applications.

- Efficiently provisioning, maintaining, and scaling the vast infrastructure and computational resources required to run intensive AI workflows at remote data centers and edge computing sites.

- Maintaining 24/7 availability and performance of remote AI workflows and infrastructure during security breaches, equipment failures, network outages, and natural disasters.

These challenges share a few common causes. First, artificial intelligence and the infrastructure that supports it are highly complex, making it difficult for human engineers to keep up. Second, many IT environments are fragmented by closed vendor solutions that integrate poorly, forcing administrators to manage too many disparate systems and allowing coverage gaps to form. Third, many AI-related workloads run off-site at data centers and edge computing sites, so it’s harder for IT teams to repair and recover AI systems that go down due to a network outage, equipment failure, or other disruptive event.

How AI orchestration streamlines AI/ML in an enterprise environment

The ideal AI orchestration platform solves these problems by automating repetitive and data-heavy tasks, unifying workflows with a vendor-neutral platform, and using out-of-band (OOB) serial console management to provide continuous remote access even during major outages.

Automation

Automation is crucial for teams to keep up with the pace and scale of artificial intelligence. Organizations use automation to provision and install AI data center infrastructure, manage storage for AI training and inference data, monitor inputs and outputs for toxicity, perform root-cause analyses when systems fail, and much more. However, tracking and troubleshooting so many automated workflows can get very complicated, creating more work for administrators rather than making them more productive. An AI orchestration platform should provide a centralized interface for teams to deploy and oversee automated workflows across applications, infrastructure, and business sites.
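As a rough illustration of what a centralized view could do, the sketch below rolls up status reports from automated workflows by site so failures stand out. The `WorkflowRun` record, its fields, the status values, and the site and workflow names are all hypothetical and chosen for the example; they do not describe any specific product's data model.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkflowRun:
    """One status report from an automated workflow (hypothetical schema)."""
    site: str
    workflow: str
    status: str  # assumed values: "ok", "failed", "running"


def summarize_by_site(runs):
    """Count runs per status for each site and list the failed workflows."""
    summary = defaultdict(lambda: {"counts": defaultdict(int), "failed": []})
    for run in runs:
        entry = summary[run.site]
        entry["counts"][run.status] += 1
        if run.status == "failed":
            entry["failed"].append(run.workflow)
    # Convert the nested defaultdicts to plain dicts for a printable result.
    return {
        site: {"counts": dict(entry["counts"]), "failed": sorted(entry["failed"])}
        for site, entry in summary.items()
    }


# Example reports from two sites (made-up names).
runs = [
    WorkflowRun("dc-east", "provision-gpu-nodes", "ok"),
    WorkflowRun("dc-east", "rotate-training-data", "failed"),
    WorkflowRun("edge-07", "root-cause-analysis", "running"),
]
report = summarize_by_site(runs)
```

Here `report["dc-east"]["failed"]` lists the workflows that need attention at that site, which is the kind of single-pane summary an administrator would otherwise assemble by hand from several tools.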

Unification

The best way to improve AI operational efficiency is to integrate all of the complicated monitoring, management, automation, security, and remediation workflows. This can be accomplished by choosing solutions and vendors that interoperate or, even better, are completely vendor-agnostic (a.k.a. vendor-neutral). For example, using open, common platforms to run AI workloads, manage AI infrastructure, and host AI-related security software can help bring everything together in one place that administrators can easily access. An AI orchestration platform should be vendor-neutral to facilitate workload unification and streamline integrations.

Resilience

AI models, workloads, and infrastructure are highly complex and interconnected, so an issue with one component could compromise interdependencies in ways that are difficult to predict and troubleshoot. AI systems are also attractive targets for cybercriminals because of their vast, valuable data sets and because they are difficult to secure. HiddenLayer’s 2024 AI Threat Landscape Report found that 77% of businesses experienced AI-related breaches in the past year. An AI orchestration platform should help improve resilience, or the ability to continue operating during adverse events like tech failures, breaches, and natural disasters.

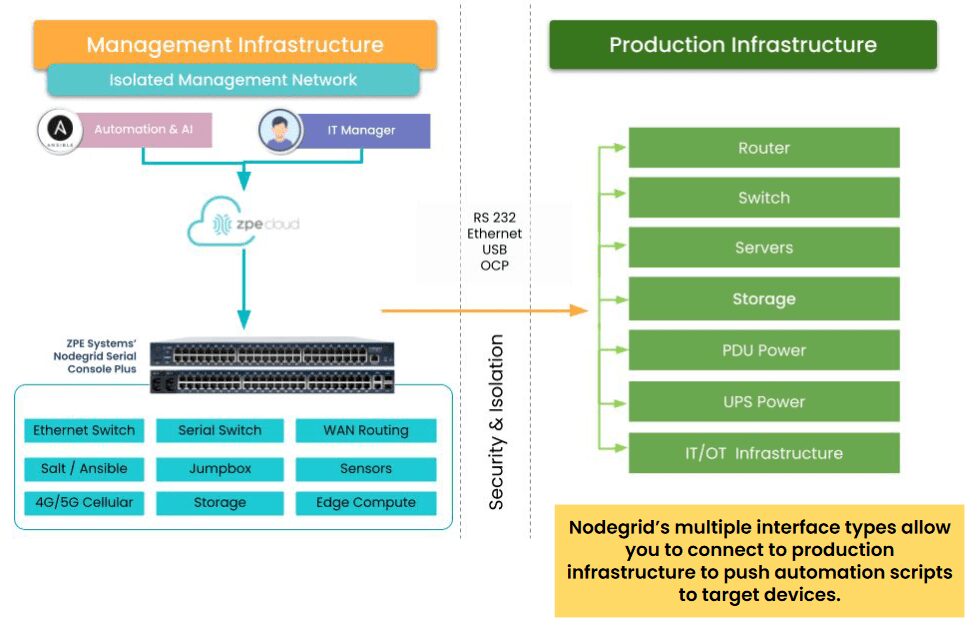

Gen 3 out-of-band management technology is a crucial component of AI and network resilience. A vendor-neutral OOB solution like the Nodegrid Serial Console Plus (NSCP) uses alternative network connections to provide continuous management access to remote data center, branch, and edge infrastructure even when the ISP, WAN, or LAN connection goes down. This gives administrators a lifeline to troubleshoot and recover AI infrastructure without costly and time-consuming site visits. The NSCP allows teams to remotely monitor power consumption and cooling for AI infrastructure. It also provides 5G/4G LTE cellular failover so organizations can continue delivering critical services while the production network is repaired.
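The idea of falling back to an alternative connection can be sketched in a few lines. This is a minimal illustration, not how the NSCP or any other product implements failover: the `ManagementPath` type, the path names, and the `tcp_probe` helper are hypothetical, and a TCP connection attempt stands in for whatever health check a real device uses.

```python
import socket
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass(frozen=True)
class ManagementPath:
    """A way to reach a remote console (hypothetical type for this sketch)."""
    name: str
    is_up: Callable[[], bool]


def tcp_probe(host: str, port: int, timeout: float = 2.0) -> Callable[[], bool]:
    """Build a health check that succeeds if a TCP connection can be opened."""
    def check() -> bool:
        try:
            with socket.create_connection((host, port), timeout=timeout):
                return True
        except OSError:
            # Covers refused connections, timeouts, and name-resolution errors.
            return False
    return check


def select_path(paths: Sequence[ManagementPath]) -> Optional[ManagementPath]:
    """Return the first reachable path in priority order, or None if all are down."""
    for path in paths:
        if path.is_up():
            return path
    return None


# Simulated outage: the production WAN is down, the cellular link is up.
paths = [
    ManagementPath("production-wan", lambda: False),
    ManagementPath("cellular-oob", lambda: True),
]
chosen = select_path(paths)
```

In this simulated outage `chosen.name` is `"cellular-oob"`. Against real equipment, the stub lambdas would be replaced with probes such as `tcp_probe("192.0.2.10", 22)`, where the address is a documentation-range placeholder rather than a real host.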

Gen 3 OOB also helps organizations implement isolated management infrastructure (IMI), a.k.a. control plane/data plane separation. This is a cybersecurity best practice recommended by CISA as well as by regulations like PCI DSS 4.0, DORA, NIS2, and the CER Directive. IMI prevents malicious actors from moving laterally from a compromised production system to the management interfaces used to control AI systems and other infrastructure. It also provides a safe recovery environment where teams can rebuild and restore systems during a ransomware attack or other breach without risking reinfection.
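One simple sanity check related to this separation is confirming that no management interface has an address inside a production network. The sketch below does only that, using Python's standard `ipaddress` module; the function name and the example addresses are made up for illustration. Address separation alone does not make a management network isolated, so treat this as a check for one obvious misconfiguration rather than a test of IMI.

```python
import ipaddress


def find_exposed_interfaces(management_ips, production_networks):
    """Return management addresses that fall inside any production network."""
    networks = [ipaddress.ip_network(net) for net in production_networks]
    exposed = []
    for ip_text in management_ips:
        ip = ipaddress.ip_address(ip_text)
        if any(ip in net for net in networks):
            exposed.append(ip_text)
    return exposed


# Example inventory (private-range addresses chosen for illustration).
production_networks = ["10.0.0.0/16", "10.1.0.0/16"]
management_ips = ["172.16.5.10", "10.1.4.20", "172.16.5.11"]
exposed = find_exposed_interfaces(management_ips, production_networks)
```

With this example inventory, `exposed` contains only `10.1.4.20`, the one management address that sits inside a production range and would be reachable from a compromised production host on that network.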

Getting the most out of your AI investment

An AI orchestration platform should streamline workflows with automation, provide a unified platform to oversee and control AI-related applications and systems for maximum efficiency and coverage, and use Gen 3 OOB to improve resilience and minimize disruptions. Reducing management complexity, risk, and repair costs can help companies see greater productivity and financial returns from their AI investments.

The vendor-neutral Nodegrid platform from ZPE Systems provides highly scalable Gen 3 OOB management for up to 96 devices with a single 1RU serial console. The open Nodegrid OS also supports VMs and Docker containers for third-party applications, so you can run AI, automation, security, and management workflows from the same device for greater operational efficiency.

Streamline AI orchestration with Nodegrid

Contact ZPE Systems today to learn more about using a Nodegrid serial console as the foundation for your AI orchestration platform.