Every IT professional knows that network updates are routine. Sometimes they go perfectly; other times the update breaks something and you have to roll back to the last known-good configuration.

But what do you do when the rollback goes wrong?

Here’s my first-hand experience with this exact scenario.

We recently rolled out what was meant to be a routine update to our engineering network. The change window, internal sign-off, test coverage, and rollback strategy were all in place. Every step was documented in advance. The setup was textbook.

But if you’ve been in IT for more than five minutes, you know that upgrades don’t always fail in the first stage.

The initial steps went smoothly, and we were feeling confident. But midway through, this one failed. It broke a core routing service and cut off our access to our remote sites.

No big deal. We had a step-by-step rollback plan that we validated in the lab. We even walked through it with a dry run.

Then things took an unexpected turn.

Our rollback failed!

Why? In the background, a dependent service had been upgraded automatically, silently triggering a chain reaction. We found ourselves dealing with Entra ID login loops, DHCP failures, and version mismatches across multiple services. Our internal DNS collapsed. With DNS gone, so was access to our identity provider, our management tools, and even our door badge system.

We were locked out of our own access control system. It was one of those nights. The rollback was supposed to save us, but what would save us from the rollback?

Luckily, we had out-of-band management

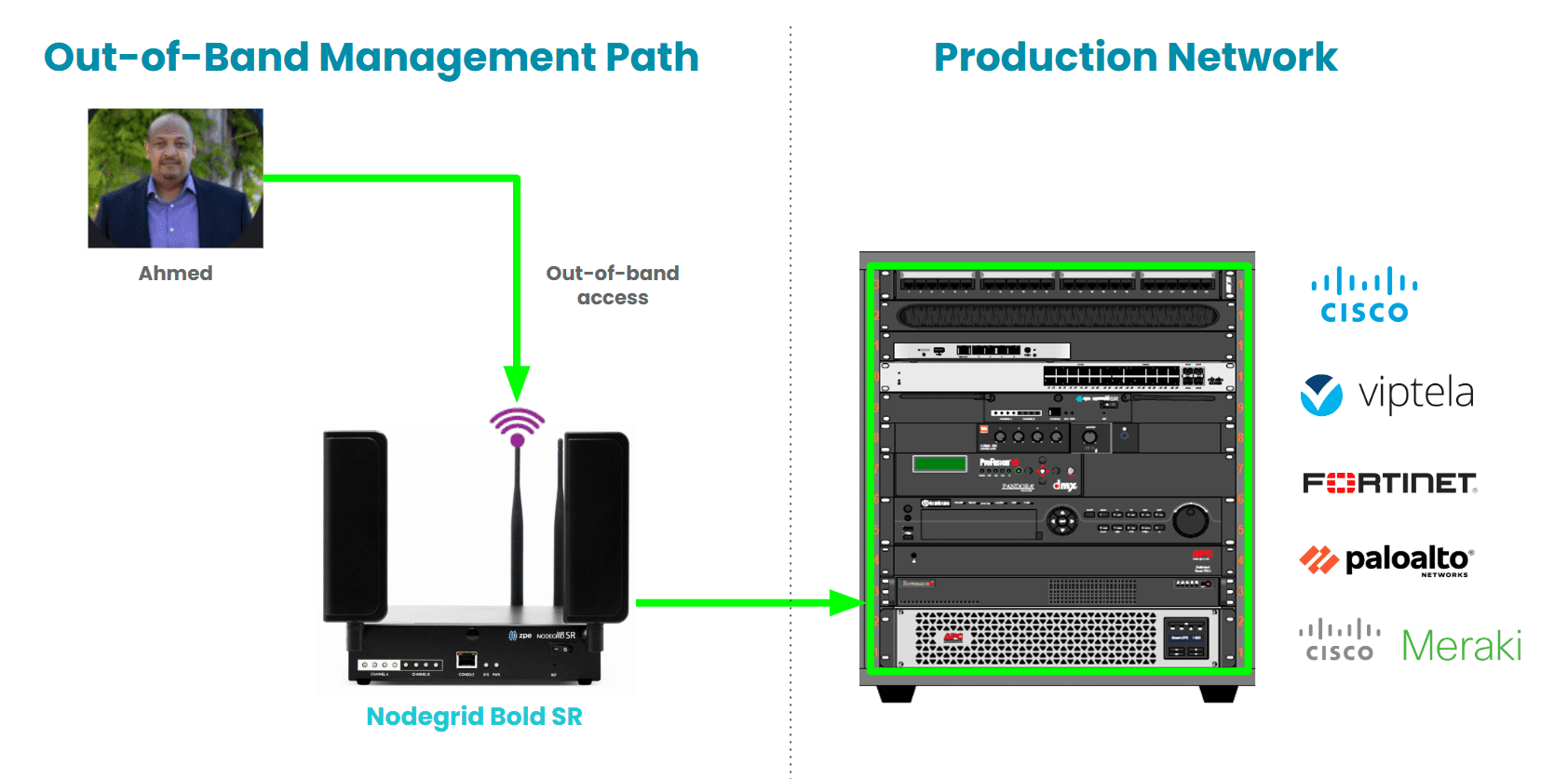

We were saved by Out-of-Band (OOB) management through our ZPE architecture.

We used secure OOB serial and cellular failover. That gave us direct control of the devices, even when the core network was down and identity services were unreachable. We stayed operational.

Image: The out-of-band management path is a dedicated access network. It acts as a safety net for instances when a rollback fails and recovery processes must take place.

Fortunately, we had already segmented the engineering network from the business network. That isolation meant the failure didn’t spread. We could take our time rebuilding the broken pieces without impacting customer operations or internal productivity tools.

All services were back up and running within a few hours.

What did we learn?

I’m sharing this because many IT teams, particularly at growth-stage businesses, put off network segmentation and OOB access, treating them as “Phase 2” projects. But when things go wrong (and they will), these are often your only way out.

Here’s what we learned:

- A rollback strategy is only as good as the assumptions behind it. A rollback that depends on “nothing else changes” is fragile by design.

- DNS, identity (such as Entra ID), and VPN are interdependent. They form a delicate triangle, and when one goes, the others often follow.

- Out-of-band access is a fundamental design requirement, not just a disaster recovery tool. If you’re managing remote or critical infrastructure, there is no substitute for direct, independent access.

- Documentation is important. Access is more important. All the runbooks in the world won’t help if you can’t reach the system that runs them.
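To make the “delicate triangle” concrete, here is a minimal Python sketch that models services as a dependency graph and computes the blast radius when one of them fails. The service names and edges are hypothetical, loosely modeled on the incident above, and the sketch assumes a service goes down if any one of its dependencies goes down.

```python
from collections import deque

# Hypothetical dependency map: each service lists the services it needs to work.
# Names and edges are illustrative assumptions, not an inventory of a real network.
DEPENDS_ON = {
    "dns": [],
    "entra_id_login": ["dns"],
    "vpn": ["dns", "entra_id_login"],
    "mgmt_tools": ["dns", "entra_id_login", "vpn"],
    "door_badges": ["dns"],
    # Assumed OOB path (serial console + cellular) with no production dependencies.
    "oob_console": [],
}


def blast_radius(failed: str, depends_on: dict[str, list[str]]) -> set[str]:
    """Return every service that stops working when `failed` goes down."""
    # Invert the graph so we can walk from a failed service to its dependents.
    dependents: dict[str, list[str]] = {name: [] for name in depends_on}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents[dep].append(service)

    # Breadth-first walk: anything that depends on a down service is also down.
    down = {failed}
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for service in dependents[current]:
            if service not in down:
                down.add(service)
                queue.append(service)
    return down


if __name__ == "__main__":
    down = blast_radius("dns", DEPENDS_ON)
    print("down:", sorted(down))
    print("still up:", sorted(set(DEPENDS_ON) - down))
```

With DNS as the failure, everything except the independent OOB console lands in the blast radius. Mapping your real dependencies this way before a change is one way to check whether a rollback plan quietly assumes that nothing else changes.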

Prepare for failure. Walk through your worst-case scenario. Don’t count on luck to save you.

Watch this demo on how to roll back and recover

My colleague Marcel put together this demo video, which shows how to access, configure, and recover infrastructure, even from thousands of miles away.

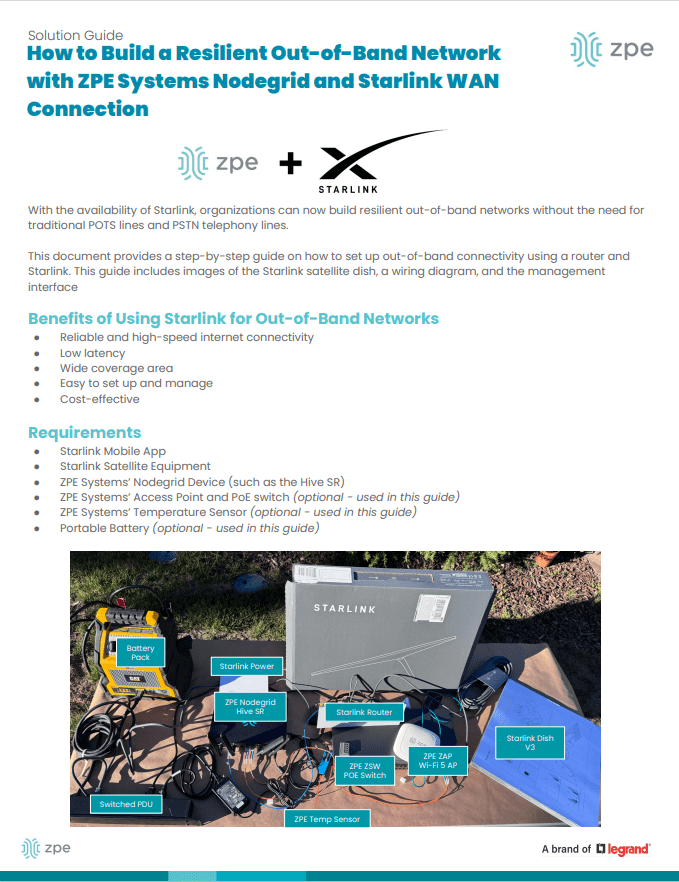

Set Up Your Own Out-of-Band Management With Starlink

Download this guide on how to set up an out-of-band network using Starlink. It includes technical wiring diagrams and a guided walkthrough.

You can download it here: How to Build Out-of-Band With Starlink